Stop Fixing CI Failures Manually: Build an AI Agent with Dagger

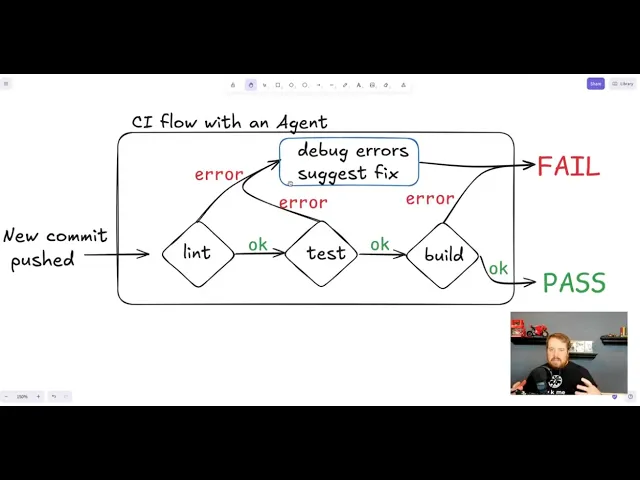

What if your CI pipeline didn't just tell you what broke, but actually fixed it for you? Imagine pushing a change, seeing a lint error pop up, and moments later, finding a ready-to-commit suggestion right there in your pull request, correcting the mistake. This isn't science fiction; it's achievable today by combining the power of AI agents with the composability of Dagger.

The Familiar Pain of CI Failures

Continuous Integration (CI) systems are the vigilant gatekeepers of our codebases. They run linters, execute tests, perform security scans, and generally ensure that incoming changes meet the project's standards. We rely on them to catch mistakes before they merge.

However, when CI flags an issue like a pesky linting violation or a failing test, the burden shifts back to the developer. Even when the error message is clear, the process is often tedious:

1. Read & Understand: Decipher the CI output to pinpoint the exact problem.

2. Context Switch: Return to your editor and pull the latest changes.

3. Implement Fix: Make the necessary code adjustments.

4. Commit & Push: Stage the changes and push them back up.

5. Wait: Watch the CI pipeline run again, hoping for green checks.

This cycle consumes valuable time and interrupts development flow. What if we could automate the entire process, directly within CI?

Solution: An AI Agent for Automated Fixes

This post explores an AI agent built with Dagger that automatically addresses linting and test failures within a CI environment. When failures are detected in a pull request, this agent:

Analyzes the failure output.

Uses tools to interact with the project's codebase and test suite.

Iteratively attempts fixes and re-runs tests/linters to validate them.

Generates a code diff containing the validated fixes.

Posts these fixes as code suggestions directly on the pull request, allowing developers to review and accept them with a single click.

Technical Deep Dive: Building the Agent with Dagger

We could try simply feeding the entire project source and the failure log to a Large Language Model (LLM) and asking it to "fix the problem." However, this approach is often too broad. LLMs can struggle with excessive context or too many possible actions, leading to unreliable or nonsensical results. Conversely, just providing the error message and asking for a code snippet lacks the context and tools needed for the LLM to validate its own suggestions effectively.

The key is finding the right balance: giving the agent enough context and capability without overwhelming it. This is where Dagger shines.

1. The Core Agent: The `DebugTests` Dagger Function

The heart of the solution is a Dagger Function called DebugTests. Instead of giving the LLM free rein over the entire container filesystem, we define a constrained environment focused on the task. We do this with a custom Dagger Module within the project, which we'll call Workspace.

The Workspace module encapsulates the specific actions the agent needs:

`ReadFile(path string) (string, error)`: Reads the contents of a specific file.

`WriteFile(path, content string) error`: Writes new content to a file.

`ListFiles(path string) ([]string, error)`: Explores the directory structure.

`RunTests() (string, error)`: Executes the project's test suite and returns the output.

`RunLint() (string, error)`: Executes the project's linters and returns the output.
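To make the file tools concrete, here is a minimal Go sketch of the three file-oriented functions. It is not the demo's code: the real module would wrap a Dagger `Directory`, while this stand-in keeps files in an in-memory map so it runs with only the standard library. The `NewWorkspace` constructor and the map-based storage are assumptions for illustration.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// Workspace is a hypothetical, in-memory stand-in for the Workspace module.
// The real module would wrap a Dagger Directory; a map keeps this runnable.
type Workspace struct {
	files map[string]string // path -> file contents
}

// NewWorkspace copies the initial files so later writes never touch the caller's map.
func NewWorkspace(initial map[string]string) *Workspace {
	files := make(map[string]string, len(initial))
	for p, c := range initial {
		files[p] = c
	}
	return &Workspace{files: files}
}

// ReadFile returns the contents of one file, or an error if it does not exist.
func (w *Workspace) ReadFile(path string) (string, error) {
	content, ok := w.files[path]
	if !ok {
		return "", fmt.Errorf("file not found: %s", path)
	}
	return content, nil
}

// WriteFile creates or overwrites a file.
func (w *Workspace) WriteFile(path, content string) error {
	if path == "" {
		return fmt.Errorf("empty path")
	}
	w.files[path] = content
	return nil
}

// ListFiles returns the sorted paths of every file under dir ("" or "." means the root).
func (w *Workspace) ListFiles(dir string) ([]string, error) {
	prefix := strings.TrimSuffix(dir, "/") + "/"
	if dir == "" || dir == "." {
		prefix = ""
	}
	var out []string
	for p := range w.files {
		if strings.HasPrefix(p, prefix) {
			out = append(out, p)
		}
	}
	sort.Strings(out)
	return out, nil
}

func main() {
	ws := NewWorkspace(map[string]string{
		"main.go":          "package main\n",
		"pkg/util/util.go": "package util\n",
	})
	_ = ws.WriteFile("pkg/util/util_test.go", "package util\n")
	files, _ := ws.ListFiles("pkg")
	fmt.Println(files) // [pkg/util/util.go pkg/util/util_test.go]
}
```

Keeping the tool surface this small is the point: the model can look around and edit files, but it cannot run arbitrary commands.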

Crucially, because my project is already Daggerized, the RunTests and RunLint functions within this Workspace module simply call the existing Dagger Functions that developers and CI already use! We don't need to reimplement test execution logic for the agent; we just expose the existing Dagger actions as tools.
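A sketch of that delegation, assuming the existing pipeline can be modeled as a hypothetical `Checker` interface with `Test` and `Lint` methods (in the real project these are the Dagger Functions CI already calls). The Workspace tools add no test logic of their own; they only forward the agent's current files. `fakeChecker` is an invented placeholder for the real pipeline.

```go
package main

import (
	"errors"
	"fmt"
)

// Checker is a hypothetical interface over the project's existing test and lint
// entry points (in the real project, the Dagger Functions CI already calls).
type Checker interface {
	Test(files map[string]string) (string, error)
	Lint(files map[string]string) (string, error)
}

// Workspace holds the agent's current view of the source plus the existing checks.
type Workspace struct {
	files   map[string]string
	checker Checker
}

// RunTests forwards to the existing test function; no test logic is reimplemented.
func (w *Workspace) RunTests() (string, error) { return w.checker.Test(w.files) }

// RunLint forwards to the existing lint function.
func (w *Workspace) RunLint() (string, error) { return w.checker.Lint(w.files) }

// fakeChecker replaces the real pipeline so the sketch runs on its own.
type fakeChecker struct{}

func (fakeChecker) Test(files map[string]string) (string, error) {
	if files["add.go"] != "func Add(a, b int) int { return a + b }" {
		return "FAIL: TestAdd", errors.New("tests failed")
	}
	return "ok", nil
}

func (fakeChecker) Lint(map[string]string) (string, error) { return "no issues", nil }

func main() {
	ws := &Workspace{
		files:   map[string]string{"add.go": "func Add(a, b int) int { return a - b }"},
		checker: fakeChecker{},
	}
	out, err := ws.RunTests()
	fmt.Println(out, err) // FAIL: TestAdd tests failed
}
```

Returning the output alongside the error matters: the failure text is what the agent reads on its next iteration, so a bare pass/fail signal would not be enough.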

2. Creating the Agent Environment

Inside the DebugTests function, we instantiate this Workspace and create a Dagger Environment with it. This Environment effectively exposes the Workspace functions (like ReadFile, WriteFile, RunTests, RunLint) as "tools" that the LLM can call.

We then provide this Environment to the LLM, along with a carefully crafted prompt. The prompt instructs the LLM on its goal (fix the test/lint failures provided in the initial input), explains the available tools (the Workspace functions), and guides its reasoning process.
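Dagger builds the tool list from the Workspace module's functions for you, so none of the following is Dagger API. It is a hypothetical stand-in (`Tool`, `Environment`, `Invoke`, and `Prompt` are invented names) that spells out what an environment amounts to conceptually: a named set of callable tools plus a prompt stating the goal, the available tools, and the initial failure output.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// Tool is a hypothetical description of one callable capability.
type Tool struct {
	Name        string
	Description string
	Call        func(args map[string]string) (string, error)
}

// Environment is a hypothetical stand-in for a Dagger Environment: a named set of tools.
type Environment struct {
	tools map[string]Tool
}

func NewEnvironment(tools ...Tool) *Environment {
	env := &Environment{tools: make(map[string]Tool)}
	for _, t := range tools {
		env.tools[t.Name] = t
	}
	return env
}

// Invoke dispatches a tool call requested by the model.
func (e *Environment) Invoke(name string, args map[string]string) (string, error) {
	t, ok := e.tools[name]
	if !ok {
		return "", fmt.Errorf("unknown tool %q", name)
	}
	return t.Call(args)
}

// Prompt states the goal, lists the tools in a stable order, and appends the failure output.
func (e *Environment) Prompt(failure string) string {
	names := make([]string, 0, len(e.tools))
	for n := range e.tools {
		names = append(names, n)
	}
	sort.Strings(names)
	var b strings.Builder
	b.WriteString("Fix the test or lint failures below. Use the tools to inspect files, apply edits, and re-run the checks until they pass.\n\nTools:\n")
	for _, n := range names {
		fmt.Fprintf(&b, "- %s: %s\n", n, e.tools[n].Description)
	}
	b.WriteString("\nFailure output:\n")
	b.WriteString(failure)
	return b.String()
}

func main() {
	files := map[string]string{"main.go": "package main\n"}
	env := NewEnvironment(
		Tool{Name: "ReadFile", Description: "read a file by path", Call: func(a map[string]string) (string, error) {
			c, ok := files[a["path"]]
			if !ok {
				return "", fmt.Errorf("file not found: %s", a["path"])
			}
			return c, nil
		}},
		Tool{Name: "RunLint", Description: "run the project's linters", Call: func(map[string]string) (string, error) {
			return "main.go:1: missing package comment", nil
		}},
	)
	fmt.Print(env.Prompt("main.go:1: missing package comment\n"))
}
```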

The agent then operates in a loop:

It receives the initial failure output.

It uses the `ReadFile` and `ListFiles` tools to understand the relevant code.

It hypothesizes a fix and uses `WriteFile` to apply it.

It uses `RunTests` or `RunLint` to check whether the fix worked.

If failures persist, it analyzes the new output and repeats the process.
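The loop above can be sketched as follows. In practice the LLM decides which tool to call next; here a hypothetical `Model` interface and a scripted fake model (`scripted`) stand in for it so the control flow is visible and testable. One design assumption worth calling out: this sketch only accepts the model's "done" signal after an independent re-run of the checks passes, and it gives up after a fixed step budget (`ErrGaveUp`).

```go
package main

import (
	"errors"
	"fmt"
)

var (
	// ErrGaveUp is returned when the step budget runs out before the checks pass.
	ErrGaveUp = errors.New("agent gave up: step budget exhausted")
	// errChecksFailed is what the fake test runner reports on failure.
	errChecksFailed = errors.New("checks failed")
)

// Action is one step the model requests: a tool call, or Done when it thinks the fix is complete.
type Action struct {
	Tool string
	Args map[string]string
	Done bool
}

// Model is a hypothetical interface over the LLM: given the transcript, choose the next action.
type Model interface {
	Next(transcript []string) Action
}

// Invoker runs a named tool such as ReadFile, WriteFile, RunTests, or RunLint.
type Invoker func(tool string, args map[string]string) (string, error)

// runAgent feeds tool results back to the model until it reports Done and an
// independent re-run of the checks passes, or until maxSteps is used up.
func runAgent(m Model, invoke Invoker, check func() (string, error), failure string, maxSteps int) error {
	transcript := []string{"initial failure: " + failure}
	for step := 0; step < maxSteps; step++ {
		a := m.Next(transcript)
		if a.Done {
			out, err := check()
			if err == nil {
				return nil // validated: the checks really pass now
			}
			// The model stopped too early; show it the remaining failures.
			transcript = append(transcript, "checks still failing: "+out)
			continue
		}
		out, err := invoke(a.Tool, a.Args)
		if err != nil {
			out += " (error: " + err.Error() + ")"
		}
		transcript = append(transcript, a.Tool+" -> "+out)
	}
	return ErrGaveUp
}

// scripted is a fake Model that replays fixed actions, then reports Done.
type scripted struct {
	actions []Action
	i       int
}

func (s *scripted) Next(_ []string) Action {
	if s.i >= len(s.actions) {
		return Action{Done: true}
	}
	a := s.actions[s.i]
	s.i++
	return a
}

func main() {
	files := map[string]string{"add.go": "return a - b"}
	runTests := func() (string, error) {
		if files["add.go"] != "return a + b" {
			return "FAIL: TestAdd", errChecksFailed
		}
		return "PASS", nil
	}
	invoke := func(tool string, args map[string]string) (string, error) {
		switch tool {
		case "ReadFile":
			return files[args["path"]], nil
		case "WriteFile":
			files[args["path"]] = args["content"]
			return "ok", nil
		case "RunTests":
			return runTests()
		}
		return "", fmt.Errorf("unknown tool %q", tool)
	}
	model := &scripted{actions: []Action{
		{Tool: "ReadFile", Args: map[string]string{"path": "add.go"}},
		{Tool: "WriteFile", Args: map[string]string{"path": "add.go", "content": "return a + b"}},
		{Tool: "RunTests"},
	}}
	err := runAgent(model, invoke, runTests, "FAIL: TestAdd", 10)
	fmt.Println(err, files["add.go"]) // <nil> return a + b
}
```

The step budget is a cheap guard against an agent that keeps "fixing" without converging; in CI it bounds both runtime and LLM cost.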

Once all failures are resolved, the agent calculates the diff between the original and modified source code and returns it.

The DebugTests function orchestrates this entire interaction, returning the final, validated diff.
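Computing the diff can be done in several ways, for example by running `git diff` inside a container. As a self-contained illustration, this sketch swaps in a simple longest-common-subsequence line diff over in-memory snapshots of the source tree. It emits full-file context without `@@` hunk headers, so it is simpler than real `git diff` output; `diffLines`, `diffTrees`, and `splitLines` are hypothetical helpers.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// diffLines builds an edit script from a longest-common-subsequence table:
// " " keeps a line, "-" drops it from the original, "+" adds it from the fixed version.
func diffLines(a, b []string) []string {
	n, m := len(a), len(b)
	// lcs[i][j] is the LCS length of a[i:] and b[j:].
	lcs := make([][]int, n+1)
	for i := range lcs {
		lcs[i] = make([]int, m+1)
	}
	for i := n - 1; i >= 0; i-- {
		for j := m - 1; j >= 0; j-- {
			switch {
			case a[i] == b[j]:
				lcs[i][j] = lcs[i+1][j+1] + 1
			case lcs[i+1][j] >= lcs[i][j+1]:
				lcs[i][j] = lcs[i+1][j]
			default:
				lcs[i][j] = lcs[i][j+1]
			}
		}
	}
	var out []string
	i, j := 0, 0
	for i < n && j < m {
		switch {
		case a[i] == b[j]:
			out = append(out, " "+a[i])
			i++
			j++
		case lcs[i+1][j] >= lcs[i][j+1]:
			out = append(out, "-"+a[i])
			i++
		default:
			out = append(out, "+"+b[j])
			j++
		}
	}
	for ; i < n; i++ {
		out = append(out, "-"+a[i])
	}
	for ; j < m; j++ {
		out = append(out, "+"+b[j])
	}
	return out
}

// splitLines splits file contents into lines, ignoring one trailing newline.
func splitLines(s string) []string {
	if s == "" {
		return nil
	}
	return strings.Split(strings.TrimSuffix(s, "\n"), "\n")
}

// diffTrees diffs two path->contents snapshots, emitting only files that changed.
// A missing file is treated like an empty one, which is fine for a sketch.
func diffTrees(before, after map[string]string) string {
	seen := map[string]bool{}
	for p := range before {
		seen[p] = true
	}
	for p := range after {
		seen[p] = true
	}
	paths := make([]string, 0, len(seen))
	for p := range seen {
		paths = append(paths, p)
	}
	sort.Strings(paths)
	var b strings.Builder
	for _, p := range paths {
		if before[p] == after[p] {
			continue
		}
		fmt.Fprintf(&b, "--- a/%s\n+++ b/%s\n", p, p)
		for _, line := range diffLines(splitLines(before[p]), splitLines(after[p])) {
			b.WriteString(line + "\n")
		}
	}
	return b.String()
}

func main() {
	before := map[string]string{"add.go": "func Add(a, b int) int {\n\treturn a - b\n}\n"}
	after := map[string]string{"add.go": "func Add(a, b int) int {\n\treturn a + b\n}\n"}
	fmt.Print(diffTrees(before, after))
}
```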

3. CI Integration: From Diff to Pull Request Suggestion

While DebugTests is the core agent logic, a second Dagger Function handles the CI integration. This function is triggered by the CI workflow specifically when the linting or testing stage fails.

It performs these steps:

Retrieves the pull request's source code using Dagger's Git capabilities.

Calls the `DebugTests` function, passing in the source code and the captured failure output.

Receives the `diff` containing the fixes from the agent.

Uses the platform's API (e.g., the GitHub API) to format and post this `diff` as code suggestions on the relevant lines within the pull request.
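The CI-side function is mostly orchestration. This sketch models its three external pieces (fetching the pull request source, calling `DebugTests`, posting to the platform API) as hypothetical function fields on a `Deps` struct so the flow runs with fakes; the real version would use Dagger's Git support and the GitHub API. `FixPullRequest` and `Deps` are invented names.

```go
package main

import "fmt"

// Deps holds hypothetical hooks for the external pieces of the CI function.
type Deps struct {
	FetchSource func(repo, ref string) (map[string]string, error)
	DebugTests  func(source map[string]string, failure string) (string, error) // returns a diff
	PostDiff    func(pr int, diff string) error
}

// FixPullRequest is meant to run only after the lint or test stage has failed.
// It reports whether any suggestions were posted.
func FixPullRequest(d Deps, repo, ref string, pr int, failure string) (bool, error) {
	source, err := d.FetchSource(repo, ref)
	if err != nil {
		return false, fmt.Errorf("fetch source: %w", err)
	}
	diff, err := d.DebugTests(source, failure)
	if err != nil {
		return false, fmt.Errorf("agent: %w", err)
	}
	if diff == "" {
		return false, nil // the agent found nothing it could validate
	}
	if err := d.PostDiff(pr, diff); err != nil {
		return false, fmt.Errorf("post suggestions: %w", err)
	}
	return true, nil
}

func main() {
	deps := Deps{
		FetchSource: func(repo, ref string) (map[string]string, error) {
			return map[string]string{"main.go": "package main\n"}, nil
		},
		DebugTests: func(source map[string]string, failure string) (string, error) {
			return "-old line\n+new line\n", nil
		},
		PostDiff: func(pr int, diff string) error {
			fmt.Printf("posting suggestions to PR #%d:\n%s", pr, diff)
			return nil
		},
	}
	posted, err := FixPullRequest(deps, "example/repo", "refs/pull/42/head", 42, "main.go:1: lint failure")
	fmt.Println(posted, err)
}
```

Skipping the post when the diff is empty keeps the agent from leaving noise on a pull request it could not fix.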

These aren't just plain comments; they are actionable suggestions tied to specific code lines. The developer can review each change and click "Commit suggestion" to apply it directly, streamlining the fix process dramatically.
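Those one-click suggestions rely on GitHub's suggestion blocks: a review comment whose body contains a fenced block tagged `suggestion`, attached to a line range on the new side of the diff. The sketch below turns one already-parsed change into a comment payload. The `Hunk` type and `toSuggestion` function are hypothetical; the JSON field names follow GitHub's pull request review comment API (`path`, `body`, `line`, `side`, `start_line`, `start_side`).

```go
package main

import (
	"encoding/json"
	"fmt"
	"strings"
)

// Hunk is a hypothetical, already-parsed change: lines StartLine..EndLine
// (1-based, inclusive, in the pull request's version of Path) become NewLines.
type Hunk struct {
	Path      string
	StartLine int
	EndLine   int
	NewLines  []string
}

// ReviewComment uses the field names of GitHub's pull request review comment API.
type ReviewComment struct {
	Path      string `json:"path"`
	Body      string `json:"body"`
	Line      int    `json:"line"`
	Side      string `json:"side"`
	StartLine int    `json:"start_line,omitempty"`
	StartSide string `json:"start_side,omitempty"`
}

const fence = "```"

// toSuggestion wraps the replacement lines in a suggestion block anchored to the
// hunk's line range on the new ("RIGHT") side of the diff. An empty block
// suggests deleting the commented lines.
func toSuggestion(h Hunk) ReviewComment {
	body := fence + "suggestion\n" + fence
	if len(h.NewLines) > 0 {
		body = fence + "suggestion\n" + strings.Join(h.NewLines, "\n") + "\n" + fence
	}
	c := ReviewComment{Path: h.Path, Body: body, Line: h.EndLine, Side: "RIGHT"}
	if h.StartLine < h.EndLine {
		// Multi-line comments need both ends of the range.
		c.StartLine = h.StartLine
		c.StartSide = "RIGHT"
	}
	return c
}

func main() {
	c := toSuggestion(Hunk{Path: "add.go", StartLine: 2, EndLine: 2, NewLines: []string{"\treturn a + b"}})
	payload, err := json.MarshalIndent(c, "", "  ")
	if err != nil {
		panic(err)
	}
	fmt.Println(string(payload))
}
```

One practical constraint: GitHub only accepts review comments on lines that are part of the pull request's diff, so a fix that touches lines the pull request did not change would need a fallback, such as a plain comment containing the diff.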

Summary: Smarter CI with Dagger and AI

By leveraging Dagger, we built an AI agent capable of automatically fixing linting and test failures. The key was designing a focused environment using a custom Workspace module, exposing specific capabilities (file I/O, test execution) as tools for the LLM. Because the project's testing and linting were already defined as Dagger Functions, the agent could reuse them directly, showcasing Dagger's power for composition and reuse.

Integrating this agent into CI transforms the developer experience. Instead of manually debugging and fixing routine errors, developers receive validated, ready-to-commit suggestions directly in their pull requests. This saves time, reduces context switching, and lets engineers focus on building features, while Dagger and AI handle the corrective busywork.

I'm excited to continue exploring this space and would love to hear about your experiences with AI agents in development workflows. Have you implemented similar approaches? What challenges have you encountered? Let's discuss in the Dagger Discord! You can check out the code from my demo here.
